Copyright

This is gonna be kinda long, and I think we’re still gonna leave stuff out, because this whole thing has been nonstop from the start.

Like, you look away for a second and suddenly there are three new AI models and two robots.

Feels like centuries have gone by, but in reality this technology has been around us for just a little while. Even though the precursors to Artificial Intelligence had already been developed and used in certain contexts for a long time, let’s stick to what we now popularly call “AI.”

It was in 2017 that the paper “Attention Is All You Need” came out, laying the groundwork for models like ChatGPT.

Later, between 2018 and 2020, OpenAI released its first GPT models (1, 2 and 3). They were still pretty basic, but kept getting better at generating human-looking text.

Then in 2021, image models like DALL·E started becoming popular.

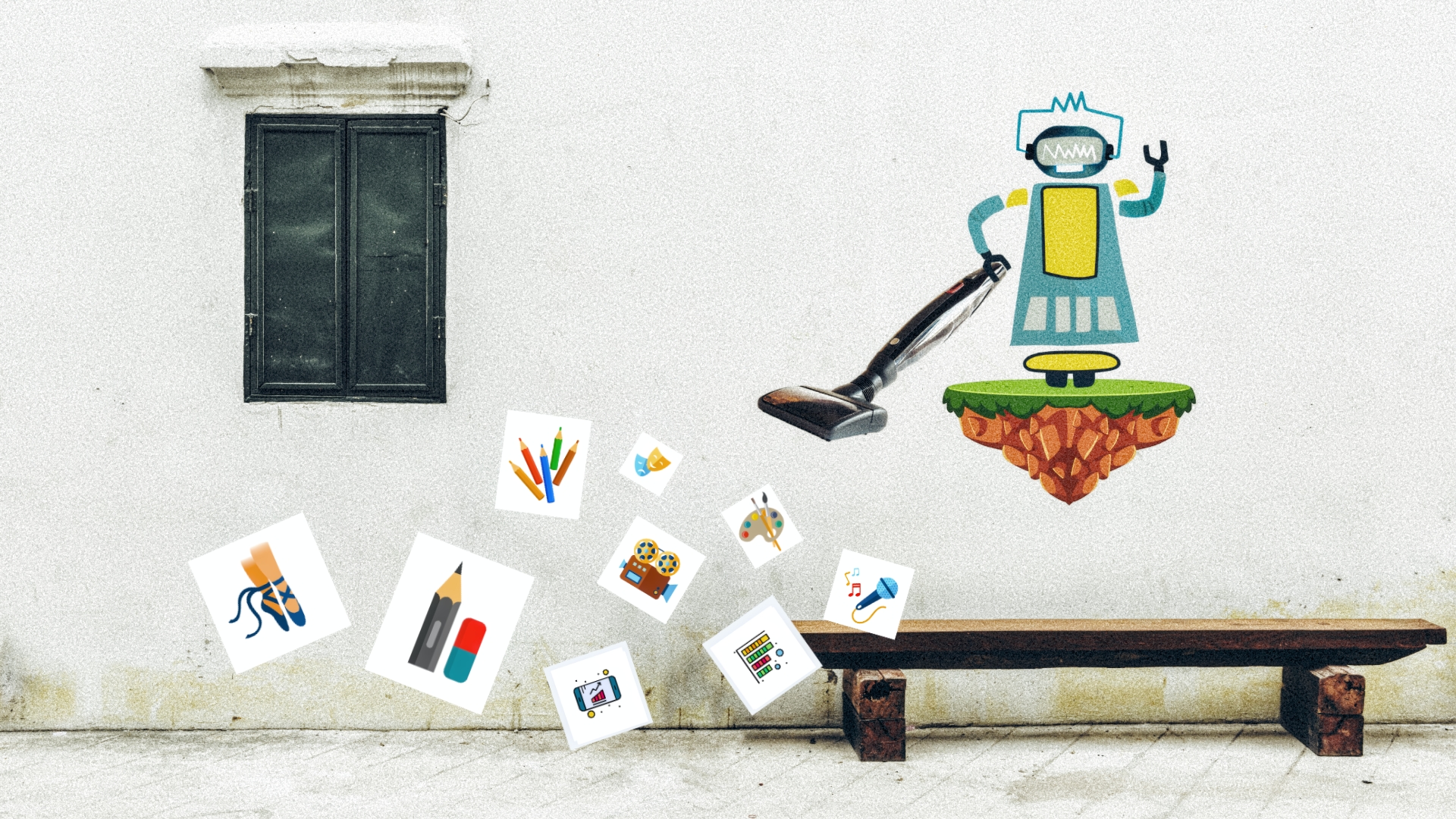

What’s funny is that, even though text models like GPT showed up first, it was the image ones that got famous first. You could say they hooked us with the “pretty stuff.”

So, we get to the 2021–2022 period and everybody’s going nuts over these creatures.

They spit out endless images, endless texts, endless startups pop up… fantasy.

And that’s the thing with pretty stuff: people get so fascinated by it that they don’t stop to ask themselves anything else, like how does it work? How was it made?

For the record, back then we were also pretty fascinated with those models, since we had been following the topic and its evolution for years, so living through that time felt like suddenly being in an Asimov book. It was fascinating.

Everything was about infinite experimentation and infinite possibilities, but when you start thinking about the possible consequences, you also start asking yourself how things work, mostly so you can calculate them better. Because when something seems too good to be true…

We’re not experts in artificial intelligence, but as far as we’ve been able to find out, not even the creators of AI models really have a clear idea of how they work.

For example, in this article from the University of Michigan, “AI’s mysterious ‘black box’ problem, explained,” they talk about this paradox. Or in this text from MIT Technology Review, “Large language models can do jaw-dropping things. But nobody knows exactly why.”

So they don’t know how they really work, right? Great, that makes building security systems super safe and easy.

Even so, and probably to get the product out as fast as possible, the official narrative has mostly focused on the benefits, with a kind of generic explanation that seemed to convince everyone. Because who cares how pretty things work if they’re pretty?

As an example of the official narrative and a summarized explanation, we could point to this Financial Times article, “What is artificial intelligence and how does it work?”

It’s fine to have a general explanation, we’re not saying everyone has to be a tech expert, but the curious thing is that the official explanation leaves out things as fundamental as that other question that dims the shine of this lovely technology: which data are AIs trained on?

We’re going to talk about this through a collection of articles, which we think will make it easier to understand.

From Time, “OpenAI used Kenyan workers making less than $2 an hour to make ChatGPT less toxic.”

From Wired, “Millions of workers are training AI models for pennies.”

From Rest of the World, “How big tech hides its outsourced African workforce.”

From The Guardian, “Companies developing AI tech are using your posts. Here’s how to opt out.”

From Forbes, “Is generative AI stealing from artists?”

Another one from Wired, “Apple, Nvidia, and Anthropic used thousands of stolen YouTube videos to train their AI.”

And another from The Guardian, “Reddit sues AI company Anthropic for allegedly ‘scraping’ user comments to train a chatbot.”

And these are just a few examples. Nice, right? Massive data theft and semi-slave labor. (We seriously recommend reading the full articles. They’re really interesting and everything makes a lot more sense.)

We haven’t even mentioned the ecological impact or the painfully permissive deregulation by governments that makes this possible. We’ll talk about that another time.

The thing is, all this information is public, but it doesn’t show up on TV, so… nothing, apparently nothing’s happening.

The world’s been a really weird place lately.

On one hand, you’ve got people on edge shouting from the rooftops, “Skynet’s coming!” On the other, the freshly minted AI “gurus” selling courses so you can learn to write coherent phrases because, for some reason, the future depends on having a degree in writing coherent phrases. Part of the tech community crying out because robots want to replace us, and the other part, like a cult, raving every time their model gets updated. People who decide to leave the internet entirely because it’s too overwhelming and others who swear that AIs are their best friend (or their loving partner). Part of the artist community (a surprisingly small portion, but we’ll talk about “artistic masochism” in another blog post, probably about the current state of live music) protesting because their intellectual property is being stolen and others pretending not to notice. Politicians saying they want to regulate AI with their super laws but then letting tech companies do whatever the fuck they want, as long as they reap the benefits of Big Data.

Have you seen the movie Airplane!? To each their own.

We should also talk a bit about the excuses people use to try to justify this massive theft.

Some people say, “AI only gets inspired, it doesn’t copy. And artists do that all the time.”

No, buddy, AI steals because that’s how it’s been trained, with stolen data. AI doesn’t get inspired like a human, it remixes existing works.

Others say, “AI-generated works are original.” Look, do you know how sampling and attribution work in music, for example? Covers? Legal originality isn’t measured just by the final output, but by the source of the data. If AI training includes protected works without permission, then the process is already using stolen intellectual property, even if the result seems new.

There’s also the main argument tech companies use to shield themselves when they get sued, which is that “AI transforms the original work and that legally counts as fair use.” Copyright law is very strict, and using thousands or millions of protected works to train AI is not fair use, even if the final output is different.

Then there’s the whole “Ghibli style” or “Simpsons style” thing, with that bit about “The original artist benefits because AI promotes their style.” Seriously? Sounds a lot like the whole “paying with exposure” thing… Did you know Miyazaki cried when they started creating millions of images in his style? He felt super benefited, for sure.

And our favorite, the mother of all arguments, the ultimate “balls of steel” claim, that is “AI democratizes access to art.”

Look… there’s nothing worse off, worse paid or more undervalued than being an artist. We’ve been the damn “court jesters” throughout history, honey.

The troubadours, the fairground performers, the rich man’s ridicule, the king’s whim, the ones painting altarpieces for whatever Medici was in charge, no fuck.

The people behind “culture makes nations” but who end up being the last shit in every society. The funny, the fun, the party, the unserious. The whole “son, wouldn’t you rather study law instead?” thing.

If it weren’t so offensive, it’d almost be funny.

What’s stopping you from picking up a pencil and some paper? What’s stopping you from singing, dancing, writing? Where is that “impenetrable barrier” that turns artists into these otherworldly beings with magical powers, forcing you to rely on a tool trained on their work just to feel like an artist? As far as I know, art is one of the most intrinsic parts of human nature there is. Give a kid some crayons and they’ll cover the hallway wall with a mural that would make Michelangelo jealous.

And that whole “Why spend years practicing when I can do it in seconds?” thing? That’s because you don’t actually want to make art, and you know it. You grab a device that blends the work of others and call it “creating,” but can you really feel proud of those “creations”? Do they add anything to you as a human being beyond the dopamine hit from the likes you might snag? So, what do you really want, to make art or to get the likes?

We get a lot of inspiration from science and sci-fi for the stuff we do, but our own experience comes into everything we create: every little choice of one note or color over another, one word instead of another, is the result of our life, our experiences and emotions, our learning… To create something you don’t just use references or inspiration, or just want to tell a story. It’s a bit of all that and more.

It’s not about the result, it’s about the process. Expression happens during a process that’s alive.

“But I ‘create’ the prompts.” Yeah, but you make them to feed a machine trained on other people’s art, so whatever comes out of it can hardly be more than that, a remix of someone else’s work.

There’s another point we wanted to include about something related that happened recently and really caught our attention.

When Ozzy died (something we’re still processing, because it kinda felt like the end of an era or something. We will talk more about this some other time), we saw tons of posts from people, bands and media using AI images to offer their condolences.

If you tell me it’s because he was a private person, hard to find material on, or that using a real image could cause copyright issues, we could even understand it (or not). But Ozzy? He was basically part of humanity’s heritage, and there are millions of pics of him online. Someone explain why you’d use AI instead of just grabbing a real photo.

It feels like some kind of social shift we still can’t put a name to, something beyond just a trend. Why, when there’s a real image of someone or something, do people pick one made with AI? Or even weirder, why take a real photo and tweak it to make it look AI-generated?

We get the convenience of having infinite AI images, videos, sounds or text, but if you don’t have the skills or the budget, there are still millions of real, free resources online. Pics, vectors, videos… there are tons of ways to make stuff.

Continuing with the copyright thing, in our last blog post, where we talked about why we left Spotify (you can read it here), we already mentioned that maybe not everything goes.

Where are we now with this copyright stuff? Well, we’re gonna tell you with another collection of articles.

From the New York Times, “Anthropic agrees to pay $1.5 billion to settle copyright infringement lawsuit.”

From Associated Press, “Warner Bros sues Midjourney.”

From Reuters, “Perplexity AI loses bid to dismiss or transfer News Corp copyright case.”

From Music Tech Policy, “AI Implications of Spotify’s Updated Terms of Use: Your Data is their New Oil.”

And this last one, which we find especially disturbing, because WTF, from CNN, “Denmark plans to thwart deepfakers by giving everyone copyright over their own features.” Crazy stuff.

These news collections are just a few examples, and there’s more. When you see it all together, it makes us think that if AIs were really as amazing as they say, this stuff wouldn’t be happening. And also that, unfortunately, the first choice of human beings is still to rely on exploitation under the excuse of progress.

We’ve mentioned it before, but we think it’s not the technology’s fault, it’s the ethics behind it. A tool is a tool, and technological and scientific progress can give us wonderful things, but also terrible ones. It all depends on who handles it and how.

And we’re back to the whole “pretty” thing, because while massive amounts of data are being stolen to give us these toys, data is also being collected for much less pretty stuff, like what’s happening in the UK with the Online Safety Act or what’s being proposed in Europe with the Chat Control law.

When you combine massive data collection and AI, a lot of things can be done, not just Ghibli-style images.

Crazy idea: what if all this is more like Big Brother, mass control and an Orwellian society? Because if they’re stealing our data and the “masses” don’t complain… I don’t know, they could go even further with other stuff, right?

AI ain’t magic. These are machines, trained on data. Our data.

And finally, we want to insist that none of this is inevitable. Inevitable is death, inevitable is the sun rising in the morning, but all of this is a human construct and, as such, it can be improved, questioned and chosen.

It’s up to us.

So meanwhile, you know, Dream, Create, Rebel.
